GitHub uses Linguist, a Ruby library, to help detect which programming language a given file is written in. Recently, an issue was filed reporting that Linguist incorrectly classifies Mercury (a programming language) files as Objective-C, since both languages use the same extension (.m). Linguist’s primary method for language detection is a file’s extension, a method that fell short for Mercury. If Mercury were added to Linguist, two languages would share the same extension, and this is where things get interesting.

If two languages share the same extension, or the file has no extension, Linguist has 3 methods for guessing the language. First, it checks whether the file has a shebang (e.g. `#!/bin/sh`). If there is no shebang, it applies a set of heuristics. For instance, if the file includes the “:-” token it concludes that the contents are Prolog code, and if “defun” is present it concludes that they are Common Lisp. If it still hasn’t found a match, it falls back to a Bayesian classifier. Roughly speaking, the classifier iterates over all of a file’s tokens and, for each token, estimates the probability that the token appears in each candidate language. It then combines those per-token probabilities into a score for each language, sorts the results, and returns an array of language-score pairs (e.g. `[['Ruby', 0.8], ['Python', 0.2]]`).
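The detection order described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Linguist’s implementation (Linguist is written in Ruby, and its language tables, heuristics and classifier are much larger): the `EXTENSIONS` and `INTERPRETERS` tables, the `detect` function and the toy training data are all hypothetical. The classifier step is a plain naive Bayes scorer that sums log-probabilities with add-one smoothing.

```python
import math
import os
import re
from collections import Counter  # training data: {language: Counter of token counts}

# Hypothetical toy tables standing in for Linguist's real language data.
EXTENSIONS = {
    ".rb": ["Ruby"],
    ".py": ["Python"],
    ".m": ["Objective-C", "Mercury"],  # the ambiguous case from the issue
    ".pl": ["Perl", "Prolog"],
}
INTERPRETERS = {"ruby": "Ruby", "python": "Python", "perl": "Perl", "sh": "Shell"}


def by_extension(path):
    """Every language registered for the file's extension (may be empty)."""
    return EXTENSIONS.get(os.path.splitext(path)[1], [])


def by_shebang(source):
    """Map '#!/bin/sh' or '#!/usr/bin/env python3' to a language, if known."""
    first_line = source.split("\n", 1)[0]
    if not first_line.startswith("#!"):
        return None
    parts = first_line[2:].split()
    if not parts:
        return None
    program = os.path.basename(parts[0])
    if program == "env" and len(parts) > 1:
        program = parts[1]
    program = re.sub(r"[0-9.]+$", "", program)  # "python3" -> "python"
    return INTERPRETERS.get(program)


def by_heuristics(source, candidates):
    """Hand-written token rules, e.g. ':-' for Prolog and 'defun' for Common Lisp."""
    if "Prolog" in candidates and ":-" in source:
        return "Prolog"
    if "Common Lisp" in candidates and "defun" in source:
        return "Common Lisp"
    return None


def by_bayes(tokens, training, candidates):
    """Naive Bayes: sum of log P(token | language), add-one smoothing, uniform prior."""
    vocab = {t for counts in training.values() for t in counts}
    scores = []
    for lang in candidates:
        counts = training[lang]
        total = sum(counts.values())
        score = sum(
            math.log((counts.get(t, 0) + 1) / (total + len(vocab))) for t in tokens
        )
        scores.append((lang, score))
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


def detect(path, source, training):
    """Extension first; then shebang, heuristics and the classifier, in that order."""
    candidates = by_extension(path)
    if len(candidates) == 1:
        return candidates[0]
    lang = by_shebang(source) or by_heuristics(source, candidates)
    if lang:
        return lang
    pool = [c for c in (candidates or list(training)) if c in training]
    tokens = re.findall(r"\w+|[^\w\s]+", source)
    ranked = by_bayes(tokens, training, pool)
    return ranked[0][0] if ranked else None
```

With both Objective-C and Mercury registered for `.m`, neither the extension nor these toy heuristics can separate them, so the decision falls through to the classifier, which only works as well as its training counts.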

I wondered how logistic regression, support vector machines, or even clustering algorithms could help classify a given file. As I dug into the data, I realized that the descriptive statistics on tokens, and even on ASCII faces, would be nearly as interesting as their predictive power. Thus, this post summarizes the descriptive statistics, while my next post will cover using tokens to predict a file’s programming language.

Methods

Fetching The Code

Using GitHub’s API, I fetched the 10 most popular repositories for each of 10 languages (C, Haskell, Go, JavaScript, Java, Lua, Objective-C, Ruby, Python and PHP). Those 10 were chosen for their popularity, differing paradigms (e.g. Haskell vs Java), differing syntax (Haskell vs Go) and overlapping syntax (C, JavaScript and Java). After retrieving a list of 100 repositories, I downloaded the zipball for each repo.
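A minimal Python sketch of the fetch step, assuming “most popular” means most stars (the post does not say which popularity measure was used) and using GitHub’s public REST endpoints for repository search and zipball downloads. The helper names (`search_url`, `zipball_url`, `top_repos`, `download_zipball`) are hypothetical; unauthenticated search requests are tightly rate-limited, so a real run would likely add an access token.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

API = "https://api.github.com"
LANGUAGES = ["c", "haskell", "go", "javascript", "java",
             "lua", "objective-c", "ruby", "python", "php"]


def search_url(language, per_page=10):
    """Search API URL for one language's repositories, most-starred first."""
    query = urlencode({"q": f"language:{language}", "sort": "stars",
                       "order": "desc", "per_page": per_page})
    return f"{API}/search/repositories?{query}"


def zipball_url(full_name):
    """Archive of the default branch for an 'owner/repo' name."""
    return f"{API}/repos/{full_name}/zipball"


def top_repos(language, n=10):
    """Return 'owner/repo' names for the top n repositories (network call)."""
    req = Request(search_url(language, n),
                  headers={"Accept": "application/vnd.github+json"})
    with urlopen(req) as resp:
        return [item["full_name"] for item in json.load(resp)["items"]]


def download_zipball(full_name, dest):
    """Save the repository archive to dest; urlopen follows GitHub's redirect."""
    with urlopen(zipball_url(full_name)) as resp, open(dest, "wb") as out:
        out.write(resp.read())
```

Looping over `LANGUAGES`, calling `top_repos` for each and then `download_zipball` for every returned name, would fetch the 100 archives.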

Tokenizing The Code

First, a list of common programming tokens (e.g. `;`, `,`, `.`, `(` and `)`) was created: tokens that would be found in many of the 10 languages of interest. Using those tokens, I created a tokenizer that outputs an object whose keys are tokens and whose values are the number of times each token occurred in the file. Base-10 numbers, hex numbers, strings (double quotes) and ‘characters’ (single quotes) were treated as 4 distinct token types, so that, for example, the number 8 was not treated as a different token from the number 44 (both are tokenized as “numbers”).

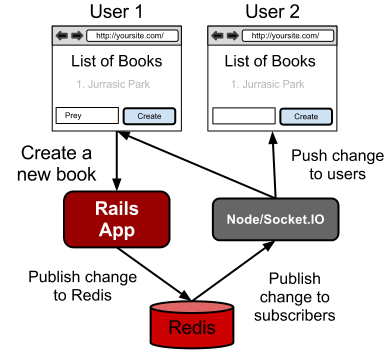
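The tokenizer itself is not published in this post, so the following is only a sketch of the idea in Python. The `SYMBOLS` list is a hypothetical stand-in for the list of common tokens, identifiers are matched only so that digits inside them are not miscounted as numbers, and hex numbers are assumed to use a `0x` prefix. String contents are swallowed by the string patterns, while comment text is tokenized like code, as in the analysis.

```python
import re
from collections import Counter

# Hypothetical symbol list; the post's actual token list is not reproduced here.
SYMBOLS = ["->", ";", ",", ".", "(", ")", "{", "}", "[", "]", "=", "*", "/", ":", "#", "<"]
_SYMBOL_RE = "|".join(re.escape(s) for s in sorted(SYMBOLS, key=len, reverse=True))

TOKEN_RE = re.compile(
    r'(?P<dq>"(?:\\.|[^"\\])*")'      # double-quoted string (contents ignored)
    r"|(?P<sq>'(?:\\.|[^'\\])*')"     # single-quoted 'character'
    r"|(?P<hex>0[xX][0-9a-fA-F]+)"    # hex number (assumes a 0x prefix)
    r"|(?P<num>\d+(?:\.\d+)?)"        # base-10 number
    r"|(?P<ident>[A-Za-z_]\w*)"       # identifier/keyword: matched, then ignored
    rf"|(?P<sym>{_SYMBOL_RE})"        # one of SYMBOLS, longest first
)

# The four literal classes are collapsed into one key each.
LABELS = {"dq": "Strings (DQ)", "sq": "Strings (SQ)", "hex": "Hex Numbers", "num": "Numbers"}


def tokenize(source):
    """Count SYMBOLS and the four literal classes; everything else is skipped."""
    counts = Counter()
    for match in TOKEN_RE.finditer(source):
        kind = match.lastgroup
        if kind == "ident":
            continue
        counts[LABELS.get(kind, match.group())] += 1
    return counts
```

One known weakness of this sketch: an apostrophe in comment prose (as in “don’t” typed with a straight quote) opens a single-quoted “character” that runs to the next quote.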

Each of the 100 repositories was traversed and its non-binary files were tokenized, with each file’s token counts added to a Redis sorted set (sorted by number of occurrences). Using a sorted set made it trivial to retrieve the 1,000 most common tokens across all 100 repositories. Each file was then re-tokenized, but only tokens present in the 1,000 most common token list were counted. The resulting data set included information on 65,804 files from 100 different repos. Along with the token data, the following was also recorded: 1) the file’s extension, 2) its path within the repository, 3) its shebang, if present, and 4) the token counts for the 250 most common tokens (I limited the first round of analysis to a smaller number of tokens). Finally, the count for each token was converted to a ratio: that token’s occurrences relative to the total occurrences of all tokens in the file (e.g. number of periods / total number of tokens). Ratios were used because absolute token counts would be skewed by large files, which simply contain more tokens.
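The aggregation and normalization steps might look like the following sketch. In place of Redis it uses an in-memory `Counter`; with Redis, the equivalent would be a `ZINCRBY` per token per file followed by `ZREVRANGE key 0 999` to read the top 1,000. The function names are hypothetical, and the ratio denominator is assumed to be the total of the tokens kept after filtering, since the post does not say whether filtered-out tokens were included.

```python
from collections import Counter


def top_tokens(file_counts, n=1000):
    """Sum per-file token counts and return the n most frequent tokens.

    In-memory stand-in for a Redis sorted set (ZINCRBY, then ZREVRANGE 0 n-1).
    """
    total = Counter()
    for counts in file_counts:
        total.update(counts)  # adds counts token by token
    return [token for token, _ in total.most_common(n)]


def to_ratios(counts, vocabulary):
    """Keep only vocabulary tokens and express each as a share of their total."""
    kept = {token: counts.get(token, 0) for token in vocabulary}
    total = sum(kept.values())
    return {token: (c / total if total else 0.0) for token, c in kept.items()}
```

For example, `to_ratios({"(": 1, ")": 2, "{": 1}, [")", "("])` keeps 3 tokens and returns `)` at two thirds and `(` at one third.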

Counting Smiley Faces

To ease analysis, I focused on C and JavaScript files (in C and JavaScript repositories), since both languages have identical single-line and multi-line comment syntax. First, comment text was separated from code. Second, the number of times an ASCII face appeared in each file’s comments was counted. The following faces were counted: `:(` `:)` `:-)` `:-D` `:p` `;)` `;-)`.
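A minimal Python sketch of the two steps, assuming C/JavaScript-style `//` and `/* */` comments and skipping double- and single-quoted string literals so that a `//` inside a string is not mistaken for a comment. It deliberately ignores JavaScript regex and template literals, which a production scanner would need to handle; the function names are hypothetical.

```python
FACES = [":(", ":)", ":-)", ":-D", ":p", ";)", ";-)"]


def extract_comments(source):
    """Return the text of // and /* */ comments, skipping string literals."""
    comments = []
    i, n = 0, len(source)
    while i < n:
        two = source[i:i + 2]
        if two == "//":                      # line comment runs to end of line
            end = source.find("\n", i)
            end = n if end == -1 else end
            comments.append(source[i + 2:end])
            i = end
        elif two == "/*":                    # block comment runs to */
            end = source.find("*/", i + 2)
            end = n if end == -1 else end
            comments.append(source[i + 2:end])
            i = end + 2
        elif source[i] in "\"'":             # skip a string, honoring backslash escapes
            quote = source[i]
            i += 1
            while i < n and source[i] != quote:
                i += 2 if source[i] == "\\" else 1
            i += 1                           # step past the closing quote
        else:
            i += 1
    return "\n".join(comments)


def count_faces(comment_text):
    """Non-overlapping count of each face; ':-)' is not also counted as ':)'."""
    return {face: comment_text.count(face) for face in FACES}
```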

Statistics

All statistics were carried out using R. Welch Two Sample t-tests were used to compare groups.
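In R, `t.test(x, y)` runs Welch’s test by default (`var.equal = FALSE`). For reference, the Welch statistic and its Welch-Satterthwaite degrees of freedom can be computed as below, where `x` and `y` would be, for example, per-file smiley-face counts for two groups (an assumption about how the groups were encoded). The p-value additionally needs the Student t distribution, which Python’s standard library lacks; SciPy’s `scipy.stats.ttest_ind(x, y, equal_var=False)` provides the full test. The function name is hypothetical.

```python
import math
from statistics import mean, variance


def welch_t(x, y):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    # Squared standard errors of each mean (sample variance / n).
    sx, sy = variance(x) / len(x), variance(y) / len(y)
    t = (mean(x) - mean(y)) / math.sqrt(sx + sy)
    df = (sx + sy) ** 2 / (sx ** 2 / (len(x) - 1) + sy ** 2 / (len(y) - 1))
    return t, df
```

Unlike Student’s t-test, this does not assume equal variances, which suits groups as different in size as the C and JavaScript files here.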

Results

Tokens

While the top 1,000 tokens by occurrence were recorded, only the top 20 are presented in Table 1 (see here for the top 1,000). Not surprisingly, numbers are the most prevalent token, with commas a very close second. Interestingly, and this is what sparked my interest in ASCII smiley faces, there are slightly more right parentheses than left (10,446,695 vs 10,425,221). While the contents of strings were ignored, the contents of comments were not. Since most (all?) of the analyzed languages require parentheses to be balanced, I presumed that the imbalance might be caused by ASCII smiley faces in comments.

Interestingly, hexadecimal numbers were the 6th most common token despite rarely being used outside of C. While hex numbers are used extensively in CSS, I only classified numbers that started with ‘x’ as hex, which excluded CSS hex colors (written with a leading `#`, e.g. `#fff`).

Table 1. Top 20 Tokens By Occurrence

| Token | Occurrences |
|---|---|
| Numbers | 19,640,325 |
| , | 19,597,223 |
| ) | 10,446,695 |
| ( | 10,425,221 |
| ; | 7,882,261 |
| Hex Numbers | 6,261,887 |
| * | 6,205,697 |
| . | 5,978,092 |
| = | 5,841,844 |
| Strings (Double Quotes - DQ) | 4,336,520 |
| } | 3,310,463 |
| { | 3,305,033 |
| / | 2,939,954 |
| : | 2,640,872 |
| -> | 2,425,261 |
| # | 2,423,779 |
| [ | 2,004,437 |
| ] | 2,002,711 |
| < | 1,591,276 |
| Strings (Single Quotes - SQ) | 1,578,686 |

The top 20 tokens, by occurrence in aggregate (across 65,804 files).

Table 2 shows the top 20 tokens and their ratios (token count / all tokens in a given file) for 16 different file types. Not surprisingly, JSON files lead the pack for double-quoted strings, curly brackets, colons and commas. Likewise, Clojure has the highest proportion of parentheses. The right arrow `->` occurred most often in PHP, C and Haskell. Finally, square brackets were most prevalent in Objective-C.

Table 2. Top 20 Tokens

| File Type | # Files | Numbers | , | ) | ( | ; | Hex Numbers | * | . | = | Strings (DQ) | } | { | / | : | -> | # | [ | ] | < | Strings (SQ) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| .php | 10289 | 0.0106 | 0.0280 | 0.0634 | 0.0632 | 0.0406 | 0.0000 | 0.0463 | 0.0257 | 0.0172 | 0.0054 | 0.0219 | 0.0218 | 0.0187 | 0.0077 | 0.0239 | 0.0004 | 0.0042 | 0.0042 | 0.0126 | 0.0375 |
| .json | 1002 | 0.0183 | 0.1510 | 0.0001 | 0.0001 | 0.0000 | 0 | 0.0001 | 0.0011 | 0.0001 | 0.3575 | 0.0927 | 0.0930 | 0.0000 | 0.2163 | 0 | 0.0001 | 0.0179 | 0.0179 | 0.0001 | 0.0001 |
| .md | 1541 | 0.0317 | 0.0238 | 0.0250 | 0.0248 | 0.0030 | 0.0001 | 0.0146 | 0.0559 | 0.0063 | 0.0108 | 0.0039 | 0.0038 | 0.0012 | 0.0251 | 0.0006 | 0.0251 | 0.0127 | 0.0127 | 0.0066 | 0.0064 |
| .hs | 6133 | 0.0416 | 0.0224 | 0.0426 | 0.0424 | 0.0014 | 0.0002 | 0.0025 | 0.0253 | 0.0407 | 0.0121 | 0.0123 | 0.0123 | 0.0006 | 0.0051 | 0.0179 | 0.0229 | 0.0090 | 0.0091 | 0.0019 | 0.0060 |
| .html | 1579 | 0.0211 | 0.0091 | 0.0117 | 0.0116 | 0.0154 | 0.0000 | 0.0007 | 0.0252 | 0.0463 | 0.0482 | 0.0295 | 0.0295 | 0.0033 | 0.0099 | 0.0003 | 0.0270 | 0.0016 | 0.0016 | 0.1150 | 0.0052 |
| .css | 701 | 0.0811 | 0.0212 | 0.0083 | 0.0083 | 0.0676 | 0.0000 | 0.0219 | 0.0564 | 0.0023 | 0.0050 | 0.0392 | 0.0394 | 0.0169 | 0.0779 | 0 | 0.0194 | 0.0011 | 0.0011 | 0.0001 | 0.0018 |
| .js | 9089 | 0.0290 | 0.0681 | 0.0646 | 0.0639 | 0.0436 | 0.0005 | 0.0075 | 0.0574 | 0.0239 | 0.0328 | 0.0226 | 0.0220 | 0.0043 | 0.0203 | 0.0000 | 0.0004 | 0.0086 | 0.0086 | 0.0013 | 0.0251 |
| .py | 3081 | 0.0211 | 0.0483 | 0.0539 | 0.0537 | 0.0008 | 0.0001 | 0.0024 | 0.0670 | 0.0364 | 0.0213 | 0.0018 | 0.0018 | 0.0003 | 0.0278 | 0.0000 | 0.0294 | 0.0092 | 0.0093 | 0.0008 | 0.0376 |
| .c | 25043 | 0.0536 | 0.0560 | 0.0573 | 0.0573 | 0.0555 | 0.0077 | 0.0469 | 0.0248 | 0.0276 | 0.0110 | 0.0156 | 0.0155 | 0.0174 | 0.0050 | 0.0194 | 0.0120 | 0.0053 | 0.0053 | 0.0078 | 0.0017 |
| .xml | 1788 | 0.0127 | 0.0055 | 0.0022 | 0.0022 | 0.0026 | 0.0000 | 0.0005 | 0.0146 | 0.0925 | 0.0993 | 0.0014 | 0.0014 | 0.0191 | 0.0315 | 0.0000 | 0.0060 | 0.0005 | 0.0005 | 0.1242 | 0.0006 |
| .clj | 83 | 0.0183 | 0.0064 | 0.0946 | 0.0944 | 0.0529 | 0 | 0.0014 | 0.0462 | 0.0034 | 0.0239 | 0.0049 | 0.0049 | 0.0001 | 0.0189 | 0.0026 | 0.0015 | 0.0339 | 0.0339 | 0.0001 | 0.0005 |
| .rb | 8527 | 0.0229 | 0.0479 | 0.0264 | 0.0263 | 0.0013 | 0.0001 | 0.0018 | 0.0539 | 0.0148 | 0.0510 | 0.0062 | 0.0062 | 0.0009 | 0.0446 | 0.0002 | 0.0166 | 0.0086 | 0.0087 | 0.0141 | 0.0530 |
| .java | 12293 | 0.0157 | 0.0262 | 0.0488 | 0.0488 | 0.0433 | 0.0001 | 0.0525 | 0.0930 | 0.0104 | 0.0118 | 0.0186 | 0.0185 | 0.0137 | 0.0032 | 0.0000 | 0.0009 | 0.0018 | 0.0018 | 0.0050 | 0.0005 |
| .go | 1259 | 0.0265 | 0.0569 | 0.0684 | 0.0684 | 0.0043 | 0.0029 | 0.0108 | 0.0688 | 0.0111 | 0.0370 | 0.0340 | 0.0339 | 0.0009 | 0.0104 | 0.0000 | 0.0002 | 0.0106 | 0.0106 | 0.0014 | 0.0017 |
| .lua | 3935 | 0.0342 | 0.0783 | 0.0526 | 0.0525 | 0.0020 | 0.0008 | 0.0017 | 0.0463 | 0.0634 | 0.0448 | 0.0173 | 0.0171 | 0.0008 | 0.0166 | 0.0001 | 0.0013 | 0.0158 | 0.0157 | 0.0010 | 0.0193 |
| .m | 742 | 0.0333 | 0.0261 | 0.0530 | 0.0530 | 0.0580 | 0.0001 | 0.0201 | 0.0327 | 0.0246 | 0.0181 | 0.0190 | 0.0190 | 0.0049 | 0.0353 | 0.0003 | 0.0120 | 0.0345 | 0.0345 | 0.0018 | 0.0005 |

The ratio of each token, relative to all tokens in a file, by file type. Only the top 16 file types are shown: there is a very long tail of file types, so I restricted this table to file types that are relatively abundant in this dataset.
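The post does not state how the per-file ratios were aggregated into Table 2. One plausible reading, sketched here purely as an assumption, is the mean of each token’s per-file ratio over all files sharing an extension, with files that lack a token contributing 0. The function name and the `(path, ratios)` input format are hypothetical.

```python
import os
from collections import defaultdict


def mean_ratios_by_type(files):
    """files: iterable of (path, {token: ratio}); returns {extension: {token: mean}}."""
    sums = defaultdict(lambda: defaultdict(float))
    n_files = defaultdict(int)
    for path, ratios in files:
        ext = os.path.splitext(path)[1]  # ".c", ".js", ... ("" if no extension)
        n_files[ext] += 1
        for token, ratio in ratios.items():
            sums[ext][token] += ratio
    # Dividing by the file count treats a missing token as a ratio of 0.
    return {ext: {tok: total / n_files[ext] for tok, total in toks.items()}
            for ext, toks in sums.items()}
```

Pooling raw counts per file type before dividing would weight large files more heavily; the per-file mean gives every file equal weight.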

ASCII Faces

To examine the discrepancy between left and right parentheses, I created a set of scripts to separate comments from code in C and JavaScript files. I then analyzed the comments and counted the number of ASCII faces that appeared. I focused on 6 types of smiley faces and 1 type of frown (see Table 3 for the types of ASCII faces and the number found).

While there were more frowns in JavaScript files, the difference wasn’t statistically significant. Furthermore, there was no statistically significant difference in total smiley faces between ‘.c’ and ‘.js’ files. However, there were more smiley faces in files that belonged to “JavaScript” GitHub repos. For instance, Node is a JavaScript repo but includes both JavaScript and C files. This suggests that the project, with its distinct maintainers, rules and conventions, matters more than the language in determining the number of smiley faces.

Over 80 percent (20,060 of 24,542) of the C files analyzed came from the Linux repository, so it made sense to look at Linux specifically. In Linux C comments I found 631 smiley faces and 73 frowns. In Linux, the most prevalent smiley face was `:-)` followed by `:)` (see Table 3).

Table 3. ASCII faces

| Face | Linux C files (20,060) | C files (24,542) | JavaScript files (6,743) |
|---|---|---|---|
| All Smiley Faces | 0.0315 (631) | 0.0577 (1415) | 0.0721 (486) |
| Frowns `:(` | 0.0036 (73) | 0.0051 (124) | 0.0249 (168) |
| `:)` | 0.0086 (172) | 0.0081 (198) | 0.0027 (18) |
| `:-)` | 0.0088 (176) | 0.0284 (697) | 0.0001 (1) |
| `:-D` | 0.0001 (2) | 0.0001 (3) | 0.0006 (4) |
| `:p` | 0.0051 (102) | 0.0120 (295) | 0.0475 (320) |
| `;)` | 0.0044 (89) | 0.0048 (117) | 0.0212 (143) |
| `;-)` | 0.0045 (90) | 0.0043 (105) | 0.0000 (0) |

The first value is the average number of times each face appears per file (total occurrences divided by the number of files in that column), while the value in parentheses is the total number of times it appears across all files. Linux C files are a subset of the C files.

Discussion

Shortcomings

Despite including 100 different repositories, Linux source files represented 30% of all files in this analysis. Ideally, the number of files from each repository, and from each language, would be balanced. One approach would be to randomly sample an equal number of files from each language. While this approach might be statistically valid, it wouldn’t produce descriptive statistics on each repository, just on a subset of files within each repository. Alternatively, Linux could be excluded from the analysis, since the number of files it contains is an outlier relative to the other repositories.
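The balanced-sampling approach could be sketched as follows: group files by language and draw the same number of files from each group, with a fixed seed so the sample is reproducible. The function name and the `(language, path)` input format are assumptions.

```python
import random
from collections import defaultdict


def balanced_sample(files, k, seed=0):
    """files: iterable of (language, path). Draw up to k paths per language."""
    by_lang = defaultdict(list)
    for lang, path in files:
        by_lang[lang].append(path)
    rng = random.Random(seed)  # seeded so the same sample can be re-drawn
    return {lang: rng.sample(paths, min(k, len(paths)))
            for lang, paths in by_lang.items()}
```

Languages with fewer than `k` files simply contribute all of their files, so a very small `k` would be needed to fully balance a dataset dominated by Linux.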

In all files there were 21,474 more right parentheses than left. Given that C and JavaScript files represent nearly half of all files in this analysis and contained only 1,901 smiley faces, it’s unlikely that the other half of the files contained nearly 20,000 smiley faces, which is roughly what it would take to account for the imbalance between left and right parentheses. Future analysis could attempt to locate the source of this difference (presumably within comments).
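One way to look for the source of the imbalance is to compute, per file or per comment block, how much of the right-minus-left parenthesis difference ASCII faces explain and how much is left over. The sketch assumes the face list from Table 3 (`:-D` and `:p` contain no parentheses, and the frown adds a left one); the names are hypothetical.

```python
# Net effect of each face on (right parens - left parens); ':-D' and ':p' add neither.
FACE_PAREN_EFFECT = {":)": 1, ":-)": 1, ";)": 1, ";-)": 1, ":(": -1}


def paren_imbalance(text):
    """Right parentheses minus left parentheses."""
    return text.count(")") - text.count("(")


def explained_by_faces(text):
    """Portion of the imbalance contributed by ASCII faces."""
    return sum(text.count(face) * effect for face, effect in FACE_PAREN_EFFECT.items())


def unexplained_imbalance(text):
    """Imbalance that remains after removing the faces' contribution."""
    return paren_imbalance(text) - explained_by_faces(text)
```

Running `unexplained_imbalance` separately on comment text and on code, and sorting files by the result, would point at where the remaining right parentheses come from.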

Conclusions

It is not surprising that the ratios of token types can differ dramatically between languages; however, I was surprised that several paired tokens (parentheses, square brackets and curly brackets) did not occur equally often in their opening and closing forms. While smiley faces can account for part of this discrepancy, they most likely do not account for all of it.

The biggest surprise was that the number of smiley faces per file was not statistically different between JavaScript and C. Since C is a low-level language, I presumed that C code would be more serious, with fewer ASCII faces. I was wrong: C code has a similar number of smiley faces per file to JavaScript.

Looking at Table 2, we can start to see patterns and differences in token ratios that might help predict a file’s language. For instance, JSON has very different token ratios from C. In the next article, I will explore the power that tokens have in predicting which programming language is used in a given file.
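As a toy illustration of that idea (a swapped-in nearest-centroid rule, not the method of the next article), a file’s token ratios can be compared with rows of Table 2. The sketch uses only five columns and three file types copied from the table; the column choice and the function name are assumptions.

```python
import math

# Five columns and three rows copied from Table 2 (ratio of token to all tokens).
COLUMNS = [",", "(", ";", "Strings (DQ)", ":"]
CENTROIDS = {
    ".json": [0.1510, 0.0001, 0.0000, 0.3575, 0.2163],
    ".c":    [0.0560, 0.0573, 0.0555, 0.0110, 0.0050],
    ".py":   [0.0483, 0.0537, 0.0008, 0.0213, 0.0278],
}


def nearest_file_type(ratios):
    """Return the file type whose Table 2 row is closest (Euclidean) to ratios."""
    vector = [ratios.get(col, 0.0) for col in COLUMNS]
    return min(CENTROIDS, key=lambda ft: math.dist(vector, CENTROIDS[ft]))
```

Even with five columns, a JSON-like profile (many double-quoted strings and colons, almost no semicolons or parentheses) lands far from the C row, while the semicolon column alone does much of the work of separating C from Python.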

If you’re interested in replicating the analysis, or obtaining the dataset, please see the links below: