I was among many Canadian developers who were happy to hear that Stripe had come to Canada. We were planning on adding subscriptions to Understoodit.com, so the timing couldn’t have been better. We assumed that it would take a couple of days to integrate Stripe, but it actually took @davidmisshula and me 7 days. The process gave me some insights that I thought I’d share.

I’ve heard that Stripe is significantly easier to integrate than its competitors, but having never integrated a payment system before, I have no basis for comparison. Overall I think Stripe is excellent and I’m glad we chose it. However, there are 4 areas that, if improved, would make Stripe perfect: 1) getting started, 2) taxes, 3) invoices and 4) edge cases.

Getting Started

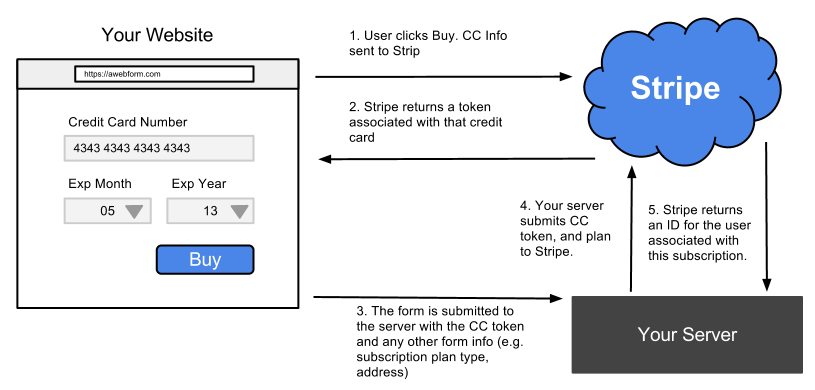

Having never integrated a payment system into a website, I wanted to be as careful as possible. I wanted to make sure that I understood as many details as possible and had time to carefully map out everything that needed to be done before pushing it to production. What I would have really liked was a diagram of how Stripe works. A good diagram is much easier for me to parse, and would have helped me understand the text documentation much more quickly.

For instance, below is one possible diagram that would show the potential set of events associated with a new user signing up for a subscription plan.

Taxes

Understoodit is based in Canada, which obligates us to collect taxes from Canadians, but not from our international customers. The kicker is that different provinces have different tax rates. In total there are 4 different tax rates, plus no taxes for international customers, for a total of 5 different tax levels. We currently have 3 monthly plans and their 3 yearly equivalents. This means that we had to create (3 + 3) × 5 = 30 plans within Stripe.
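To make the arithmetic concrete, here is a minimal sketch of how the plan count multiplies out. The plan and tax-level names are placeholders I made up for illustration; only the counts (3 plans, 2 billing intervals, 5 tax levels) come from our setup.

```python
from itertools import product

# Placeholder names, not our real plan identifiers.
PLANS = ["plan_1", "plan_2", "plan_3"]
INTERVALS = ["monthly", "yearly"]
# 4 taxed levels plus one untaxed level for international customers.
TAX_LEVELS = ["tax_a", "tax_b", "tax_c", "tax_d", "no_tax"]


def plan_ids():
    """Return one identifier per (plan, interval, tax level) combination."""
    return [
        f"{plan}_{interval}_{tax}"
        for plan, interval, tax in product(PLANS, INTERVALS, TAX_LEVELS)
    ]


if __name__ == "__main__":
    ids = plan_ids()
    print(len(ids))  # (3 + 3) x 5 = 30
```

Every new plan or tax level multiplies the list again, which is why a separate tax setting would have been so much simpler.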

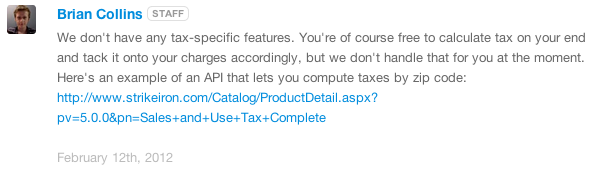

It would have been significantly easier if Stripe had a ‘tax’ feature, in the same way it has coupons. If such a feature existed, we’d only have had to create 6 plans in Stripe and 4 tax levels. Stripe invoices would then include the subtotal, the amount of taxes and the total (one less thing for us to calculate). While Stripe does a great job of prorating payments when a user switches from one plan to another, it becomes tricky for us when they switch provinces. Calculating taxes isn’t that difficult, but it’s one more thing we have to get right, and one more thing we have to calculate when sending an invoice to our customers.
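For a sense of the calculation we end up doing ourselves, here is a rough sketch of splitting an invoice into subtotal, tax and total. The tax-level names and rates below are made-up examples, not the actual provincial rates, and `invoice_amounts` is a hypothetical helper rather than anything Stripe provides.

```python
from decimal import Decimal, ROUND_HALF_UP

# Illustrative rates only; real rates depend on the customer's province.
TAX_RATES = {
    "level_a": Decimal("0.05"),
    "level_b": Decimal("0.13"),
    "international": Decimal("0"),
}


def invoice_amounts(subtotal_cents, tax_level):
    """Return subtotal, tax and total in cents for a given tax level."""
    rate = TAX_RATES[tax_level]
    # Work in cents and round half up to the nearest cent.
    tax = (Decimal(subtotal_cents) * rate).quantize(
        Decimal("1"), rounding=ROUND_HALF_UP
    )
    tax_cents = int(tax)
    return {
        "subtotal": subtotal_cents,
        "tax": tax_cents,
        "total": subtotal_cents + tax_cents,
    }


if __name__ == "__main__":
    print(invoice_amounts(2000, "level_b"))
```

Working in integer cents with `Decimal` avoids floating-point rounding surprises, which matters once these numbers end up on an invoice.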

Invoices

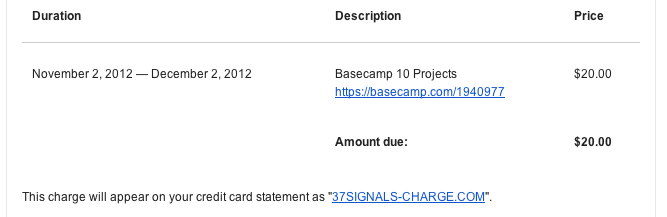

Every online subscription that I currently have sends me a simple invoice at the end of each billing period. Some have PDFs attached that I can easily send to an accountant. Additionally, those services include admin panels that list all past invoices, which can easily be downloaded as PDFs.

It would be amazing if Stripe handled 1) emailing invoices and 2) creating a PDF of each invoice. Developers could provide simple HTML templates for email and PDF invoices, and Stripe would fill in the blanks and send the invoice at the correct time, to the correct user. I suspect people would be willing to pay extra for this feature.
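To illustrate the kind of “fill in the blanks” template I have in mind, here is a small sketch using Python’s standard `string.Template`. The field names and the `render_invoice` helper are my own invention for this example; this is not an existing Stripe feature.

```python
from html import escape
from string import Template

# Hypothetical template; the placeholders are the "blanks" to be filled in.
INVOICE_TEMPLATE = Template(
    "<h1>Invoice for $customer_name</h1>\n"
    "<p>Plan: $plan_name</p>\n"
    "<p>Subtotal: $subtotal</p>\n"
    "<p>Tax: $tax</p>\n"
    "<p>Total: $total</p>\n"
)


def format_cents(cents):
    """Format an integer number of cents as a dollar amount, e.g. $22.60."""
    return f"${cents // 100}.{cents % 100:02d}"


def render_invoice(customer_name, plan_name, subtotal_cents, tax_cents):
    """Fill the template with one customer's invoice details."""
    return INVOICE_TEMPLATE.substitute(
        customer_name=escape(customer_name),  # escape user-supplied text
        plan_name=escape(plan_name),
        subtotal=format_cents(subtotal_cents),
        tax=format_cents(tax_cents),
        total=format_cents(subtotal_cents + tax_cents),
    )


if __name__ == "__main__":
    print(render_invoice("Jane Doe", "Example plan (monthly)", 2000, 260))
```

The same filled-in HTML could feed both the email body and a PDF renderer, so developers would only maintain one template.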

Edge Cases

Like any feature, a payment system has multiple edge cases. For instance, here are a few that we dealt with:

- Upgrade plan and simultaneously change provinces (i.e. tax rate)

- Downgrade plan and simultaneously change provinces (i.e. tax rate)

- Change credit card info, but not billing address

- Change plan, but keep credit card and billing info the same

Stripe employees can no doubt enumerate many more edge cases than someone adding Stripe for the first time. It would be helpful if they provided a checklist of edge cases for developers. The checklist could also include common security concerns that developers should understand before rolling payments out.

Conclusions

With all the hype on Hacker News, I had this vision of Stripe being magically easy to integrate. While it wasn’t rocket science, it was certainly more effort than I envisioned. The whole process was made significantly more difficult by having to handle taxes.

I wonder if there would be value in Stripe conducting the following usability experiment. Get a dozen computer science and engineering undergrads and ask each of them to integrate Stripe into an existing website. Stripe employees would sit with them and monitor their progress (or lack thereof), but provide no help. I suspect that they’d find some surprises in how novices approach Stripe integration. The information gained in such an experiment could help them create better documentation, reduce the development burden and ultimately create a more perfect Stripe.